How to Build a Real-World AI Product with Transformers (LLMs) — From Scratch

A step-by-step technical blueprint for building domain-specific AI products with Transformers, covering dataset preparation, embeddings, architecture, training, and generation techniques.


Everyone is talking about AI, but how do you actually build an AI product from the ground up, tailored to a specific domain?

Here’s a technical blueprint, inspired by my experience building projects like a custom AI poetry generator that implements the GPT-2 architecture from scratch.

✅ Step 1: Define Your Problem Statement

1. Identify the exact task: Text generation? Summarization? Domain-specific Q&A?

2. Define the domain scope (e.g., poetry, medical reports, legal documents).

✅ Step 2: Curate and Prepare Your Dataset

1. Collect high-quality domain data (structured or unstructured).

2. Clean and preprocess the data.

3. Apply tokenization:

- Byte Pair Encoding (BPE) or WordPiece for subword tokenization

- Special tokens: [BOS], [EOS], [PAD] for sequence boundaries and padding
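To make the tokenization step concrete, here is a minimal sketch of BPE in plain Python. It learns merge rules from a toy corpus, applies them to a word, and wraps the result in the special tokens listed above. The function names (`learn_bpe_merges`, `tokenize`, `add_special_tokens`) and the toy corpus are assumptions made for illustration; a real project would more likely rely on an established tokenizer library.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe_merges(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a list of strings."""
    # Every word starts as a tuple of characters, weighted by its frequency.
    words = Counter(tuple(word) for line in corpus for word in line.split())
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # nothing left to merge
        best = max(pair_counts, key=pair_counts.get)  # most frequent pair
        merges.append(best)
        merged_words = Counter()
        for symbols, freq in words.items():
            merged_words[merge_pair(symbols, best)] += freq
        words = merged_words
    return merges


def tokenize(word, merges):
    """Split a word into subword tokens by replaying merges in learned order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


def add_special_tokens(tokens, max_len):
    """Wrap tokens in [BOS]/[EOS] and pad to `max_len` (assumes max_len >= 2)."""
    seq = ["[BOS]"] + tokens[: max_len - 2] + ["[EOS]"]
    return seq + ["[PAD]"] * (max_len - len(seq))


# Hypothetical toy corpus, for illustration only.
merges = learn_bpe_merges(["low low low lower lowest"], num_merges=2)
print(add_special_tokens(tokenize("lowest", merges), max_len=8))
```

The merges are replayed in the order they were learned, which is what lets frequent fragments such as "low" become a single token while rare suffixes stay split into characters.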

✅ Step 3: Embedding Representations

Before feeding tokens into the model:

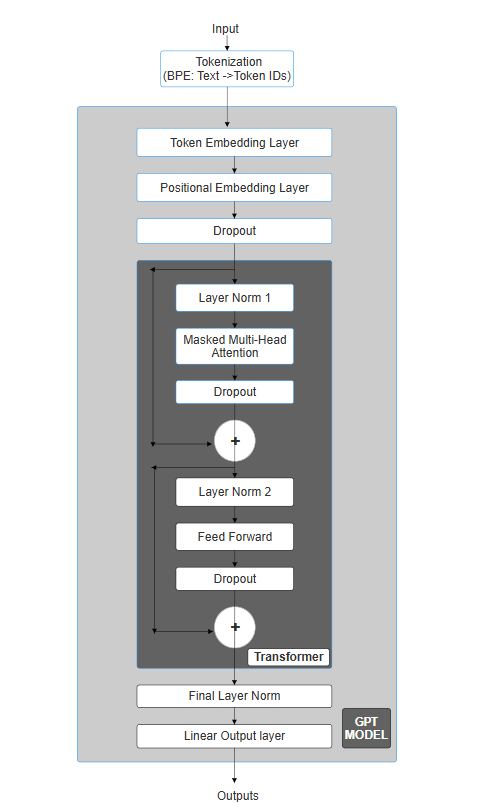
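The figure's details are not reproduced in the text, so the following is only a sketch of one common setup, assumed here rather than taken from the original: GPT-2-style models sum a learned token embedding with a learned positional embedding. The class name and the sizes in the usage lines are hypothetical.

```python
import torch
import torch.nn as nn


class TokenAndPositionEmbedding(nn.Module):
    """Sum of learned token and learned position embeddings (an assumed design)."""

    def __init__(self, vocab_size, max_len, d_model):
        super().__init__()
        self.token_emb = nn.Embedding(vocab_size, d_model)
        self.pos_emb = nn.Embedding(max_len, d_model)

    def forward(self, token_ids):
        # token_ids: integer tensor of shape (batch, seq_len), seq_len <= max_len
        seq_len = token_ids.size(1)
        positions = torch.arange(seq_len, device=token_ids.device)
        # Position embeddings have shape (seq_len, d_model) and broadcast over the batch.
        return self.token_emb(token_ids) + self.pos_emb(positions)


# Hypothetical sizes, for illustration only.
embed = TokenAndPositionEmbedding(vocab_size=100, max_len=16, d_model=32)
vectors = embed(torch.randint(0, 100, (2, 10)))
print(vectors.shape)
```

The output keeps the batch and sequence dimensions and adds a feature dimension of size `d_model`, which is the shape the decoder blocks expect.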

✅ Step 4: Transformer Architecture – Building the Brain

Implement a stack of Transformer Decoder blocks, each with:

1. Multi-Head Self-Attention – lets the model focus on different parts of the sequence

2. Feed-Forward Network – a position-wise MLP that further transforms each token's representation

3. Residual Connections & Layer Normalization – stabilize training and improve gradient flow

💡 In my poetry generator, I implemented a GPT-2-like Transformer Decoder architecture from scratch in PyTorch.
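For readers who want to see how the three components fit together, here is a minimal sketch of a single decoder block in PyTorch. It is not the poetry generator's actual code: it leans on the built-in `nn.MultiheadAttention` instead of a hand-written attention layer, and it assumes the pre-LayerNorm ordering and 4x feed-forward width that GPT-2 uses. The class name and dimensions are hypothetical.

```python
import torch
import torch.nn as nn


class DecoderBlock(nn.Module):
    """One pre-LayerNorm decoder block with causal self-attention (a sketch)."""

    def __init__(self, d_model, n_heads, dropout=0.1):
        super().__init__()
        self.ln1 = nn.LayerNorm(d_model)
        self.attn = nn.MultiheadAttention(
            d_model, n_heads, dropout=dropout, batch_first=True
        )
        self.ln2 = nn.LayerNorm(d_model)
        self.ffn = nn.Sequential(
            nn.Linear(d_model, 4 * d_model),
            nn.GELU(),
            nn.Linear(4 * d_model, d_model),
            nn.Dropout(dropout),
        )

    def forward(self, x):
        # x: (batch, seq_len, d_model)
        seq_len = x.size(1)
        # Boolean mask: True marks future positions a token must not attend to.
        causal_mask = torch.triu(
            torch.ones(seq_len, seq_len, dtype=torch.bool, device=x.device),
            diagonal=1,
        )
        h = self.ln1(x)
        attn_out, _ = self.attn(h, h, h, attn_mask=causal_mask, need_weights=False)
        x = x + attn_out               # residual connection around attention
        x = x + self.ffn(self.ln2(x))  # residual connection around the feed-forward net
        return x


# Hypothetical sizes, for illustration only.
block = DecoderBlock(d_model=16, n_heads=4)
print(block(torch.randn(2, 5, 16)).shape)
```

The causal mask is what makes this a decoder: each position can only see itself and earlier positions, so the block can be trained on next-token prediction. A full model would stack several of these blocks and finish with a final layer norm and a linear projection to vocabulary logits.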

✅ Step 5: Training & Fine-Tuning

✅ Step 6: Generation Techniques

For producing meaningful and diverse outputs:
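The specific techniques are not listed here, so as an assumption, this sketch shows two widely used ones: temperature scaling and top-k sampling. It is written in plain Python over a list of logits so it stays framework-agnostic; the function name and example values are hypothetical.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Sample a token id from raw logits with temperature and optional top-k."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Lower temperature sharpens the distribution; higher temperature flattens it.
    scaled = [value / temperature for value in logits]
    # Rank token ids by score and keep only the top_k best (all if top_k is None).
    candidates = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]
    # Numerically stable softmax weights over the surviving candidates.
    max_logit = scaled[candidates[0]]
    weights = [math.exp(scaled[i] - max_logit) for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


# Hypothetical logits for a 4-token vocabulary, for illustration only.
example_logits = [0.1, 2.0, -1.0, 1.5]
print(sample_next_token(example_logits, temperature=0.8, top_k=2))
```

With `top_k=1` this reduces to greedy decoding, since only the highest-scoring token survives. Raising `top_k` or `temperature` trades predictability for diversity, which is usually the knob worth tuning for creative domains such as poetry.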

📌 Key Takeaways

💬 Let’s Connect

If you’re building or planning to build your own AI models, let’s connect!

🔖 Hashtags

#GenerativeAI #LLM #DeepLearning #TransformerModels #AIDevelopment #ProductEngineering #MLPipeline